Are you using the Kirkpatrick framework for learning evaluation? While there are alternatives, Kirkpatrick is the most familiar and widely used learning evaluation framework, so improving how it is used will lift evaluation practice across our industry. Although it looks deceptively simple, the framework is often implemented hastily and without enough thought about good practice. Improving evaluation practices is a high-leverage way to enhance the effectiveness and impact of your learning solutions.

In early 2024, I shared some observations on LinkedIn about the most common traps and issues we see people fall into when using the Kirkpatrick framework. The post sparked a lot of conversation and interest – in fact, it generated the most interaction of any post I’ve ever made on LinkedIn. Given this level of interest, I thought it worth expanding on these insights and offering some suggestions for how to address these common challenges.

Let’s explore common traps in using the Kirkpatrick framework and how to address them.*

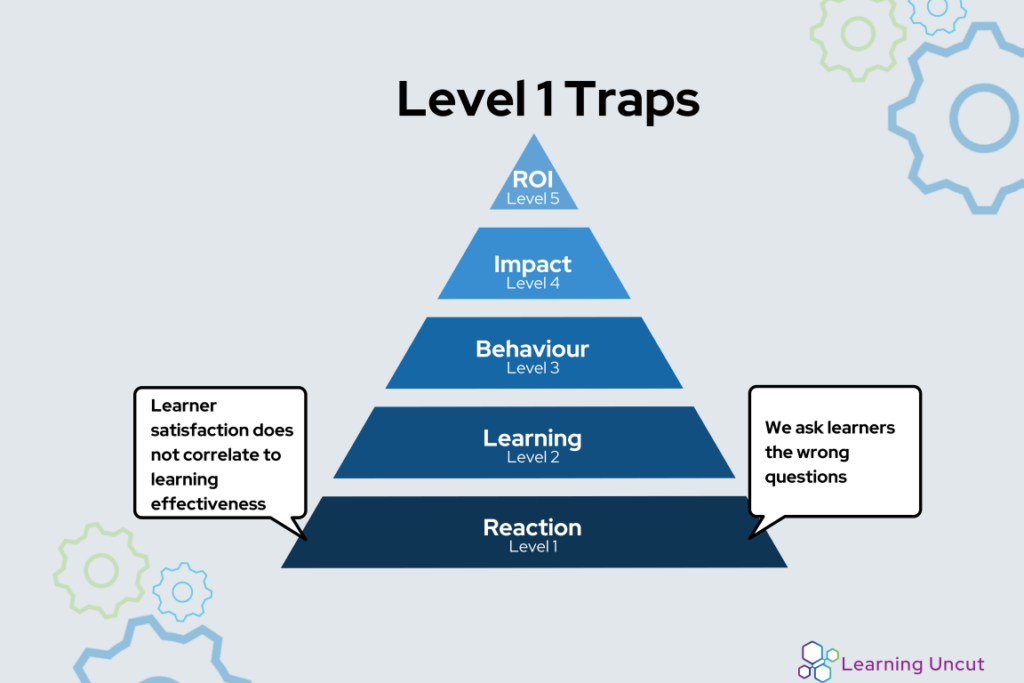

Reaction: Level 1

Common Traps:

- Asking learners questions that they don’t have the expertise to answer (e.g., “How did the course structure support your learning?”)

- Focusing on low-priority questions (e.g., quality of food)

- Relying too heavily on learner satisfaction and NPS as indicators of learning quality – learning can sometimes be uncomfortable

Try Instead:

- Ask about factors correlated with learning effectiveness: comprehension, attention, and realistic practice

- Focus on questions that learners can meaningfully answer based on their experience

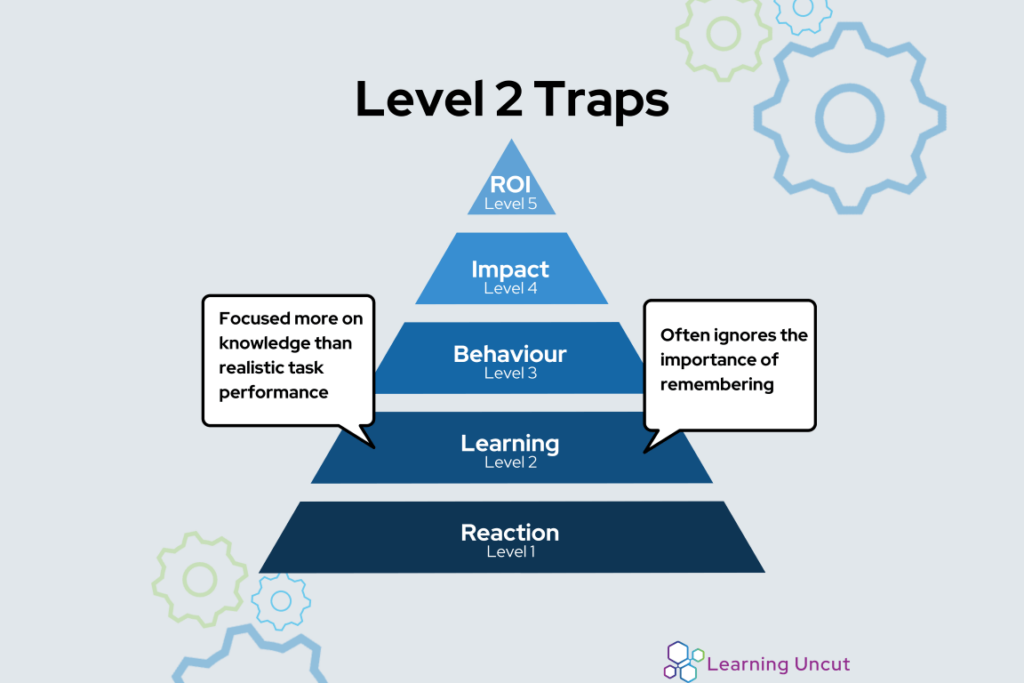

Learning: Level 2

Common Traps:

- Focusing on knowledge acquisition rather than practical application

- Overlooking long-term retention in favour of short-term knowledge gains

- Testing immediately after training, which does not indicate retention

Try Instead:

- Reinforce and test recall using a spaced repetition approach

- Focus on practical task performance rather than just theoretical knowledge

- Assess ability to apply learning on the job and/or to realistic scenarios 2-3 days post-training

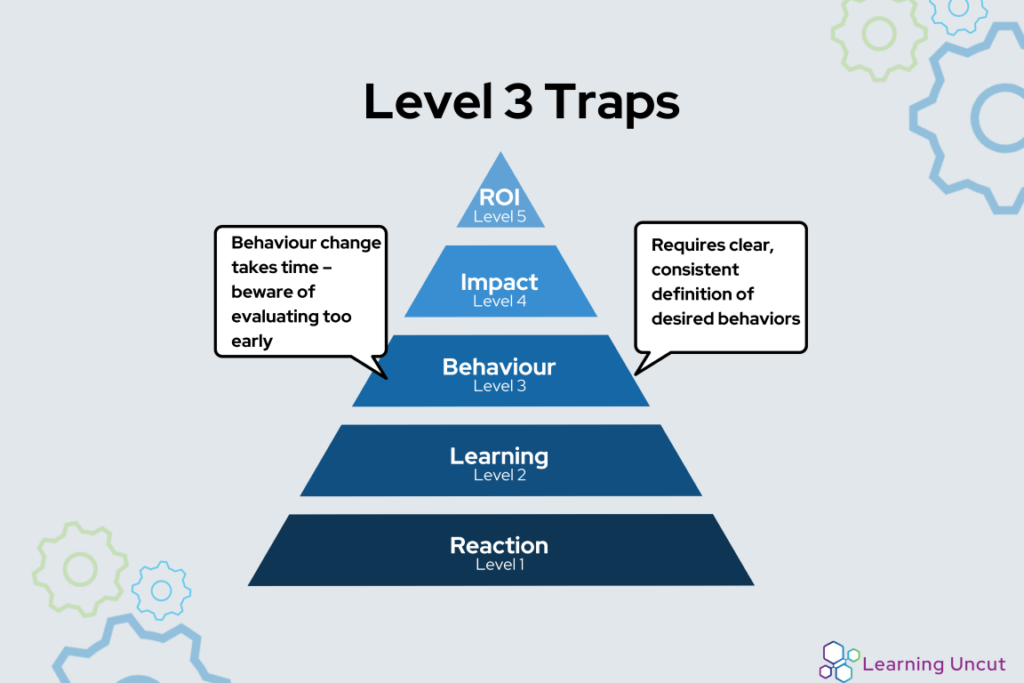

Behaviour: Level 3

Common Traps:

- Evaluating behaviour change too early, failing to account for the time it takes for new behaviours to become habitual

- Lack of clear, consistent definitions of desired behaviours

Try Instead:

- Allow 8-12 weeks post-training for observable and measurable behaviour change (as recommended by Emma Weber in Turning Learning Into Action)

- Identify target behaviours upfront during the performance consulting process

- Ensure all stakeholders have a shared understanding of what behaviour change looks like
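
For planning purposes, the timing guidance in this article can be turned into calendar dates. The following is a minimal Python sketch rather than a prescribed tool: the function name `evaluation_windows` and the dictionary keys are hypothetical, and the windows simply encode the 2-3 days (Level 2 application check) and 8-12 weeks (Level 3 behaviour check) mentioned here. Adjust them to suit your own context.

```python
from datetime import date, timedelta


def evaluation_windows(training_end: date) -> dict:
    """Return (earliest, latest) dates for follow-up evaluation.

    Assumed windows, taken from the guidance in this article:
    - Level 2 application check: 2-3 days after training
    - Level 3 behaviour check: 8-12 weeks after training
    """
    return {
        "level_2_application": (
            training_end + timedelta(days=2),
            training_end + timedelta(days=3),
        ),
        "level_3_behaviour": (
            training_end + timedelta(weeks=8),
            training_end + timedelta(weeks=12),
        ),
    }


if __name__ == "__main__":
    # Hypothetical training end date, for illustration only.
    windows = evaluation_windows(date(2024, 3, 1))
    for level, (earliest, latest) in windows.items():
        print(f"{level}: {earliest.isoformat()} to {latest.isoformat()}")
```

Putting these dates in the calendar when the program is scheduled makes it less likely that follow-up evaluation is skipped or run too early.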

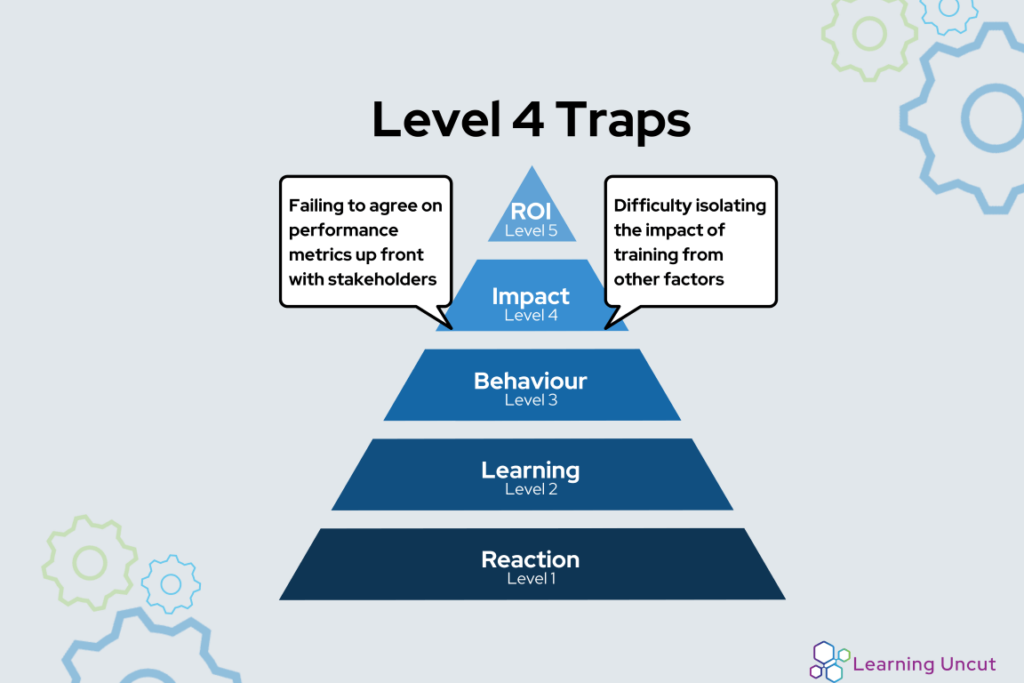

Impact: Level 4

Common Traps:

- Failing to agree on performance metrics upfront, leading to subjective and inconsistent measurement

- Difficulty isolating the impact of training from other factors influencing performance

Try Instead:

- Identify and agree on relevant performance metrics upfront using a performance consulting process

- Instead of seeking an unachievable level of “statistical validity”, aim for “roughly reasonable” standards using business performance data

- Use this data in combination with learning data to have better conversations with stakeholders about improving (rather than proving) learning initiative impact
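
One way a “roughly reasonable” comparison might look is sketched below in Python. This is an illustration under stated assumptions, not a method taken from this article: it compares the change in an agreed metric for a trained group against the change for a comparison group over the same period. The function name `rough_impact_estimate` and all numbers are hypothetical, and the result does not isolate training from other factors. It is a conversation starter rather than proof.

```python
from statistics import mean


def rough_impact_estimate(trained_before, trained_after,
                          comparison_before, comparison_after):
    """Change in the trained group's average metric minus the change
    in a comparison group's average over the same period.

    This is a rough indicator only; it assumes both groups were
    exposed to broadly similar conditions apart from the training.
    """
    trained_change = mean(trained_after) - mean(trained_before)
    comparison_change = mean(comparison_after) - mean(comparison_before)
    return trained_change - comparison_change


if __name__ == "__main__":
    # Hypothetical metric values (e.g. a quality score per team member).
    estimate = rough_impact_estimate(
        trained_before=[70, 72, 68],
        trained_after=[78, 80, 76],
        comparison_before=[71, 69, 70],
        comparison_after=[72, 73, 71],
    )
    print(f"Rough difference in change: {estimate}")
```

A figure like this is most useful alongside Level 2 and Level 3 data, where it can prompt questions about what is helping or hindering performance.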

For more on embracing an improvement mindset in L&D evaluation, read my recent blog post on this topic.

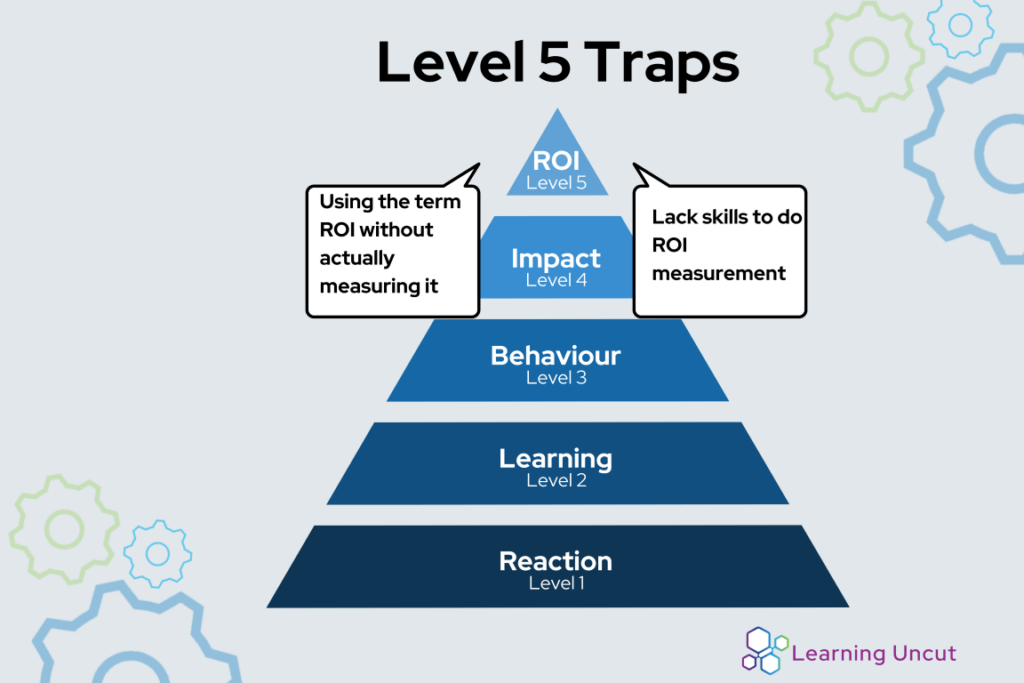

ROI: Level 5

Common Traps:

- Using the term ROI without actual measurement, undermining credibility

- Lack of skills among L&D professionals to measure ROI effectively

Try Instead:

- Just stop! Focus on improving evaluation at levels 3 and 4 instead.

Ajay M. Pangarkar has written extensively about the challenges of measuring training ROI. He argues that L&D is a cost centre, and we should focus on evaluating its effectiveness through the value it delivers to improving profit-focused activities. As he states:

“…how do you assess the viability for your training efforts if it’s not applying training ROI? First, embrace that training is a cost and evaluating its effectiveness arrives through the value it delivers to improving profit-focused activities. This causal relationship is the basis for evaluating impact.”

For more on this perspective, read Pangarkar’s article The Myth of Training ROI.

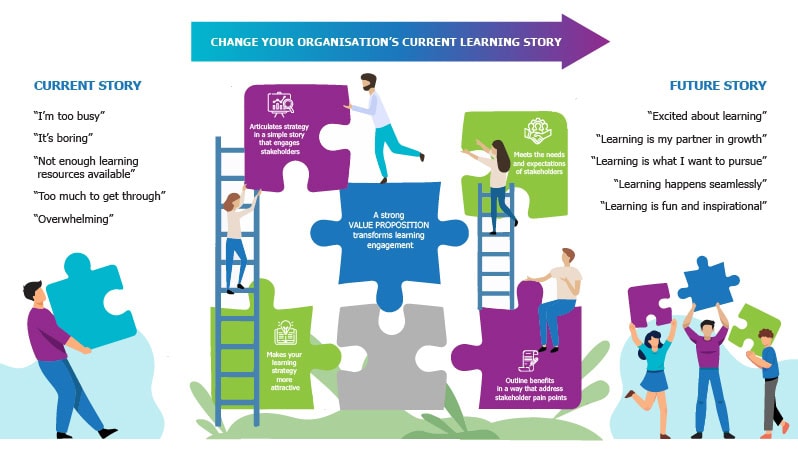

Moving Forward

Your stakeholders may not be asking for evaluation beyond Level 1 – or at all. It’s up to you to shift the conversation and your approach higher up the Kirkpatrick levels. As Kat Koppett pointed out in response to my LinkedIn post, many L&D teams are incentivised to focus solely on Level 1 survey responses. Breaking this cycle is crucial for demonstrating true value.

Evaluation is critical for continuously improving the effectiveness and impact of your L&D work. Start by addressing these common pitfalls and engaging with expert insights to develop a more effective evaluation strategy.

Ready to enhance your learning evaluation practices? Let’s discuss how we can start this journey together. Book a call to discuss how Learning Uncut can help you improve learning evaluation.

Read the original LinkedIn post that sparked this discussion and join the conversation on learning evaluation practices.

* I’d like to acknowledge the influence that Dr Will Thalheimer’s research and work on learning evaluation has had on my thinking and work on this topic in recent years. You will find this reflected in the traps and tips, some of which I have sourced and/or adapted from Thalheimer’s writing and several discussions I’ve had with him. While I haven’t specifically attributed content in this article, I want to acknowledge and refer the reader to two sources for more of Thalheimer’s detailed analysis and explanation of issues with the use of Kirkpatrick. The first is The Learning-Transfer Evaluation Model report. The second is his book, Performance-Focused Learner Surveys.